This is a full-stack application with a React front-end and a FastAPI back-end. The main features I announced in this post are now done. To track progress and see the results so far, view the project repository on GitHub here.

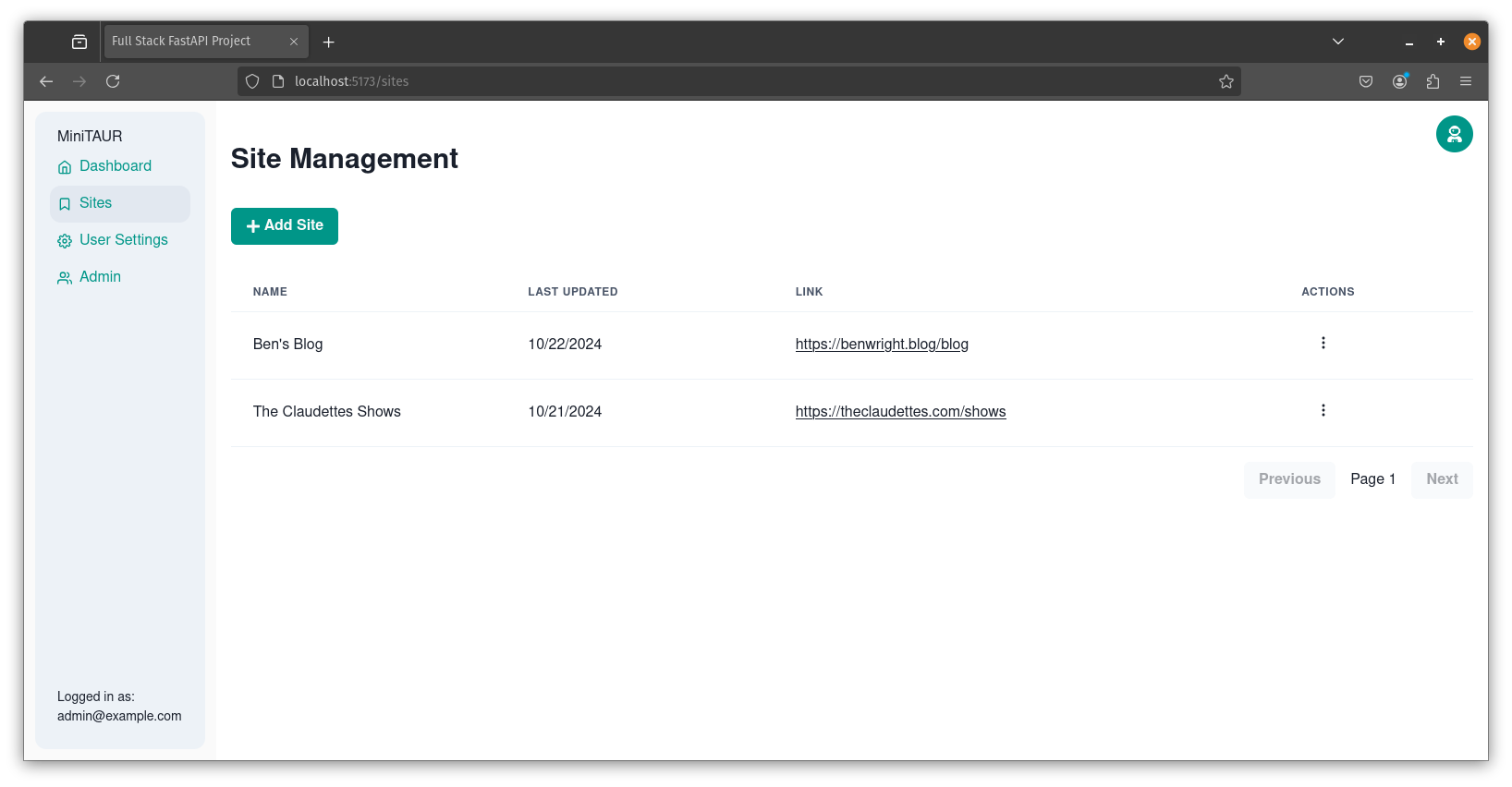

The app is meant to be a one-stop shop for seeing which of your favorite websites have updates. Instead of opening 20 bookmarks and scrolling for updates (while cross-referencing your calendar), you get an at-a-glance view of which sites have changed, plus a one-click option to open those links. So far, I’ve added about 1,000 lines of code to the app’s open-source template. That includes data models for websites, APIs for updating the database, and a UI for managing the websites. Check out a screenshot below:

Now I can see when my favorite bands announce a new show or my favorite blog makes a new post!

Reflections

There’s a lot of room to grow. I want to add the option to sort pages by their last update, which would mean more React development. I could also sort by most frequently clicked links, which would take database changes as well as new UI. I would also like to highlight sites with recent updates by default, let users tag sites with categories, and filter based on those tags. The app works now, but I love adding new full-stack features like these to a project!
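As a rough sketch of the sorting and tag-filtering ideas above, here is what the logic could look like in plain Python. The `Website` class and its field names (`last_updated`, `click_count`, `tags`) are assumptions made up for illustration, not the project's actual data model, and in the real app the sorting might live in the React UI instead.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Website:
    # Hypothetical fields; the real model in the app may differ.
    name: str
    url: str
    last_updated: datetime
    click_count: int = 0
    tags: set[str] = field(default_factory=set)


def sort_by_last_update(sites: list[Website]) -> list[Website]:
    """Most recently updated sites first."""
    return sorted(sites, key=lambda s: s.last_updated, reverse=True)


def sort_by_clicks(sites: list[Website]) -> list[Website]:
    """Most frequently clicked sites first."""
    return sorted(sites, key=lambda s: s.click_count, reverse=True)


def filter_by_tag(sites: list[Website], tag: str) -> list[Website]:
    """Keep only the sites carrying the given tag."""
    return [s for s in sites if tag in s.tags]


sites = [
    Website("Band", "https://band.example", datetime(2024, 5, 1), 3, {"music"}),
    Website("Blog", "https://blog.example", datetime(2024, 6, 15), 10, {"reading"}),
]
print([s.name for s in sort_by_last_update(sites)])  # ['Blog', 'Band']
```

Sorting by clicks is the one that needs a database change, since a counter like `click_count` would have to be stored and incremented each time a link is opened.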

This type of full-stack application development with React aligns with my years of enterprise experience developing applications for the web. For larger applications, though, I’d put a lot more work into asking users which features matter most to them. Maybe filtering on tags isn’t so important to most people. Maybe they’d prefer a browser extension, like a bookmark manager, instead of a React-based web interface. For now, this is for my own personal and educational use. That said, please contact me or leave a comment below if you think this could help you!

Bonus Topic: Continue & Ollama

Part of why I’ve been able to make rapid progress on this project is my use of AI copilots that help me write code. The copilot extension, Continue; the LLM platform, Ollama; and the AI models themselves, such as Code Llama, are all open-source. Also, since Ollama runs the AI models locally on your computer, I am able to run models without a subscription or any cost other than the power for my GPU. (I also shut off telemetry, which means this is a viable technology stack for organizations with proprietary information or privacy concerns.) That said, these smaller local models miss a lot of context. If I tell them, “add create, read, update, and delete methods for a new class based on SQLModel,” they will write 80% of the code required. But the result isn’t functional, and it will often misspell or forget variables required by the context.

The local AI models have been very good at writing helper functions, such as “make sure this URL starts with https://.” But there are three areas where they help the most: 1. Helping me brainstorm, through the chat functionality, the scope of changes required for a feature. 2. Auto-completing lines of code as I write them, saving me 50% of my typing. 3. Explaining error messages and referencing documentation to help fix issues. That speeds up debugging and investigation a lot!
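For a sense of scale, the kind of helper mentioned above might look something like this. It is a minimal sketch, not the function from the project; the name `ensure_https` and the choice to upgrade `http://` links are assumptions for illustration.

```python
def ensure_https(url: str) -> str:
    """Return the URL with an https:// prefix (hypothetical helper).

    - URLs that already start with https:// are returned unchanged.
    - http:// URLs are upgraded to https://.
    - URLs with no scheme (or a leading //) get https:// prepended.
    """
    cleaned = url.strip()
    if not cleaned:
        raise ValueError("URL must not be empty")

    lowered = cleaned.lower()
    if lowered.startswith("https://"):
        return cleaned
    if lowered.startswith("http://"):
        # Swap the scheme and keep everything after it as typed.
        return "https://" + cleaned[len("http://"):]
    # No scheme given: drop any leading slashes and add one.
    return "https://" + cleaned.lstrip("/")
```

Small, self-contained functions like this are a good fit for a local model because everything it needs to know is in the prompt itself.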

All together, the AI keeps the coding momentum going by taking care of small details, so I can focus on the larger tasks and on bringing all the pieces of full-stack development together.